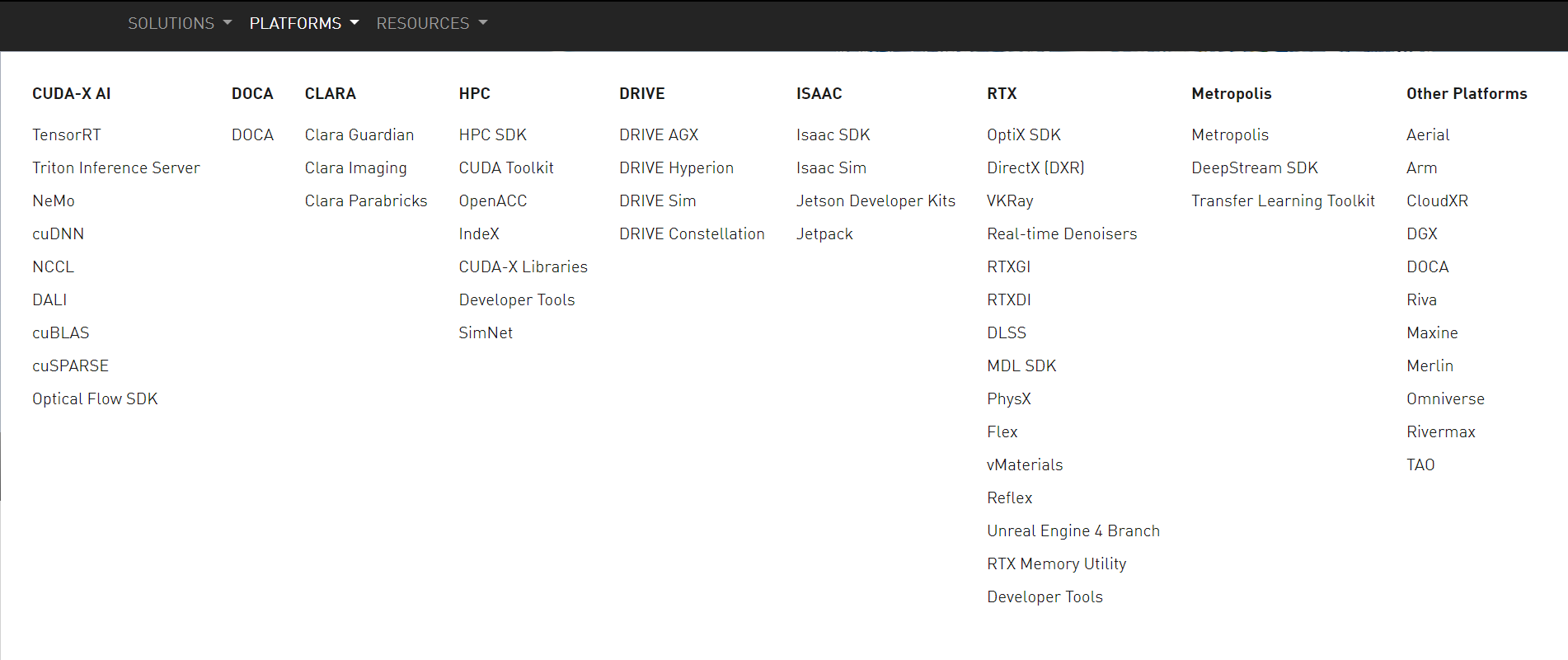

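The image is a screenshot from the official website; these notes work through the material following the categories it shows.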

CUDA-X AI

TensorRT

NVIDIA® TensorRT™ is an SDK for high-performance deep learning inference. It includes a deep learning inference optimizer and runtime that delivers low latency and high throughput for deep learning inference applications.

TensorRT-based applications perform up to 40X faster than CPU-only platforms during inference. With TensorRT, you can optimize neural network models trained in all major frameworks, calibrate for lower precision with high accuracy, and deploy to hyperscale data centers, embedded, or automotive product platforms.

TensorRT is built on CUDA®, NVIDIA’s parallel programming model, and enables you to optimize inference leveraging libraries, development tools, and technologies in CUDA-X™ for artificial intelligence, autonomous machines, high-performance computing, and graphics. With new NVIDIA Ampere Architecture GPUs, TensorRT also leverages sparse tensor cores providing an additional performance boost.

TensorRT provides INT8 and FP16 optimizations for production deployments of deep learning inference applications such as video streaming, speech recognition, recommendation, fraud detection, and natural language processing. Reduced precision inference significantly reduces application latency, which is a requirement for many real-time services, as well as autonomous and embedded applications.
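To make the reduced-precision idea concrete, here is a minimal sketch of symmetric INT8 quantization in plain Python. It is not TensorRT's API or its calibration algorithm: the function names are hypothetical, and picking the scale from the maximum absolute value is an assumption made for illustration (a real calibrator may choose the range differently, using representative data).

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127].

    Assumption: the scale is derived from the largest absolute value.
    """
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize_int8(quantized, scale):
    """Map the integers back to approximate float values."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.02, 1.0]
    q, scale = quantize_int8(weights)
    restored = dequantize_int8(q, scale)
    # Each restored value differs from the original by at most scale / 2.
    print(q, scale, restored)
```

The rounding error per value is bounded by half the scale, which is why choosing a good range matters for keeping accuracy high at lower precision.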

With TensorRT, developers can focus on creating novel AI-powered applications rather than performance tuning for inference deployment.

Triton Inference Server

NVIDIA Triton™ Inference Server simplifies the deployment of AI models at scale in production. Open-source inference serving software, it lets teams deploy trained AI models from any framework (TensorFlow, NVIDIA® TensorRT®, PyTorch, ONNX Runtime, or custom) from local storage or cloud platform on any GPU- or CPU-based infrastructure (cloud, data center, or edge).

NeMo

NVIDIA NeMo™ is an open-source toolkit for researchers developing state-of-the-art conversational AI models.

Building state-of-the-art conversational AI models requires researchers to quickly experiment with novel network architectures. This means going through the complex and time-consuming process of modifying multiple networks and verifying compatibility across inputs, outputs, and data pre-processing layers.

NVIDIA NeMo is a Python toolkit for building, training, and fine-tuning GPU-accelerated conversational AI models using a simple interface. Using NeMo, researchers can build state-of-the-art conversational AI models using easy-to-use application programming interfaces (APIs). NeMo runs mixed precision compute using Tensor Cores in NVIDIA GPUs and can scale up to multiple GPUs easily to deliver the highest training performance possible.

NeMo is used to build models for real-time automated speech recognition (ASR), natural language processing (NLP), and text-to-speech (TTS) applications such as video call transcriptions, intelligent video assistants, and automated call center support across healthcare, finance, retail, and telecommunications.

cuDNN

The NVIDIA CUDA® Deep Neural Network library (cuDNN) is a GPU-accelerated library of primitives for deep neural networks. cuDNN provides highly tuned implementations for standard routines such as forward and backward convolution, pooling, normalization, and activation layers.
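The routines named above can be illustrated with a small pure-Python reference. This is only a sketch of what forward convolution, activation, and pooling compute; the function names are hypothetical and it does not use or mirror the cuDNN API. It assumes stride 1 and no padding for the convolution, and non-overlapping windows for pooling.

```python
def conv2d(image, kernel):
    """Stride-1, no-padding cross-correlation (the 'convolution' of deep learning)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Activation layer: clamp negative values to zero."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling with a square window."""
    return [
        [
            max(feature_map[i + a][j + b]
                for a in range(size) for b in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]


if __name__ == "__main__":
    image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    kernel = [[1, 0], [0, 1]]
    print(max_pool2d(relu(conv2d(image, kernel))))
```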

Deep learning researchers and framework developers worldwide rely on cuDNN for high-performance GPU acceleration. It allows them to focus on training neural networks and developing software applications rather than spending time on low-level GPU performance tuning. cuDNN accelerates widely used deep learning frameworks, including Caffe2, Chainer, Keras, MATLAB, MxNet, PaddlePaddle, PyTorch, and TensorFlow. For access to NVIDIA optimized deep learning framework containers that have cuDNN integrated into frameworks, visit NVIDIA GPU CLOUD to learn more and get started.

NCCL

The NVIDIA Collective Communication Library (NCCL) implements multi-GPU and multi-node communication primitives optimized for NVIDIA GPUs and Networking. NCCL provides routines such as all-gather, all-reduce, broadcast, reduce, reduce-scatter as well as point-to-point send and receive that are optimized to achieve high bandwidth and low latency over PCIe and NVLink high-speed interconnects within a node and over NVIDIA Mellanox Network across nodes.
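The collective routines listed above are easiest to understand by what each rank holds before and after the call. The sketch below simulates those semantics on plain Python lists, with one list per rank. It is not the NCCL API and performs no communication; the function names are hypothetical, and `reduce_scatter` assumes the buffer length divides evenly by the number of ranks.

```python
def all_reduce(buffers):
    """Every rank ends with the element-wise sum of all ranks' buffers."""
    total = [sum(column) for column in zip(*buffers)]
    return [list(total) for _ in buffers]


def all_gather(buffers):
    """Every rank ends with the concatenation of all ranks' buffers."""
    gathered = [x for buf in buffers for x in buf]
    return [list(gathered) for _ in buffers]


def broadcast(buffers, root=0):
    """Every rank ends with a copy of the root rank's buffer."""
    return [list(buffers[root]) for _ in buffers]


def reduce_scatter(buffers):
    """Rank r ends with the r-th chunk of the element-wise sum."""
    ranks = len(buffers)
    total = [sum(column) for column in zip(*buffers)]
    chunk = len(total) // ranks
    return [total[r * chunk:(r + 1) * chunk] for r in range(ranks)]


if __name__ == "__main__":
    ranks = [[1, 2], [3, 4]]  # two ranks, two elements each
    print(all_reduce(ranks))
    print(reduce_scatter(ranks))
```

In data-parallel training, all-reduce is the operation typically used to sum gradients so that every GPU applies the same update.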

Leading deep learning frameworks such as Caffe2, Chainer, MxNet, PyTorch and TensorFlow have integrated NCCL to accelerate deep learning training on multi-GPU multi-node systems.

NCCL is available for download as part of the NVIDIA HPC SDK and as a separate package for Ubuntu and Red Hat.

DALI

The NVIDIA Data Loading Library (DALI) is a portable, open source library for decoding and augmenting images, videos, and speech to accelerate deep learning applications. DALI reduces latency and training time by overlapping training with pre-processing, which mitigates data-loading bottlenecks. It provides a drop-in replacement for the built-in data loaders and data iterators in popular deep learning frameworks, for easy integration or retargeting to different frameworks.

Training neural networks with images requires developers to first normalize those images. Moreover, images are often compressed to save on storage. Developers have therefore built multi-stage data processing pipelines that include loading, decoding, cropping, resizing, and many other augmentation operators. These data processing pipelines, which are currently executed on the CPU, have become a bottleneck, limiting overall throughput.

DALI is a high performance alternative to built-in data loaders and data iterators. Developers can now run their data processing pipelines on the GPU, reducing the total time it takes to train a neural network. Data processing pipelines implemented using DALI are portable because they can easily be retargeted to TensorFlow, PyTorch and MXNet.
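The idea of overlapping pre-processing with training can be sketched with a background thread and a bounded queue. This is an illustration only: it runs on the CPU with the standard library, does not use DALI, and every name in it (`preprocess`, `prefetching_loader`, `train`) is hypothetical. The normalization step stands in for the decode and augment stages.

```python
import queue
import threading


def preprocess(sample):
    """Stand-in for decode + augment: normalize 8-bit pixel values to [0, 1]."""
    return [pixel / 255.0 for pixel in sample]


def prefetching_loader(samples, depth=2):
    """Yield preprocessed samples while a worker thread prepares the next ones."""
    buffer = queue.Queue(maxsize=depth)
    sentinel = object()  # marks the end of the stream

    def worker():
        for sample in samples:
            buffer.put(preprocess(sample))  # blocks when the buffer is full
        buffer.put(sentinel)

    threading.Thread(target=worker, daemon=True).start()
    while True:
        item = buffer.get()
        if item is sentinel:
            return
        yield item


def train(samples):
    """Consume batches as they become ready; returns how many values were seen."""
    seen = 0
    for batch in prefetching_loader(samples):
        seen += len(batch)  # stand-in for a training step
    return seen


if __name__ == "__main__":
    print(train([[0, 255], [51]]))
```

The bounded queue is the design choice that matters here: the consumer never waits for work that could have been prepared earlier, and the producer cannot run arbitrarily far ahead and exhaust memory.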

cuBLAS

The cuBLAS Library provides a GPU-accelerated implementation of the basic linear algebra subroutines (BLAS). cuBLAS accelerates AI and HPC applications with drop-in industry standard BLAS APIs highly optimized for NVIDIA GPUs. The cuBLAS library contains extensions for batched operations, execution across multiple GPUs, and mixed and low precision execution. Using cuBLAS, applications automatically benefit from regular performance improvements and new GPU architectures. The cuBLAS library is included in both the NVIDIA HPC SDK and the CUDA Toolkit.
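As a reference for what a BLAS matrix-multiply routine computes, the sketch below implements the general matrix multiply (GEMM) operation `alpha * A @ B + beta * C` on nested Python lists, plus a batched variant. It is a plain-Python illustration with hypothetical function names, not the cuBLAS API, and it makes no attempt at performance.

```python
def gemm(alpha, a, b, beta, c):
    """Return alpha * (a @ b) + beta * c for row-major nested lists."""
    rows, inner, cols = len(a), len(b), len(b[0])
    return [
        [
            alpha * sum(a[i][k] * b[k][j] for k in range(inner)) + beta * c[i][j]
            for j in range(cols)
        ]
        for i in range(rows)
    ]


def gemm_batched(alpha, a_batch, b_batch, beta, c_batch):
    """Apply the same GEMM independently to each problem in a batch."""
    return [gemm(alpha, a, b, beta, c)
            for a, b, c in zip(a_batch, b_batch, c_batch)]


if __name__ == "__main__":
    a = [[1, 2], [3, 4]]
    b = [[5, 6], [7, 8]]
    c = [[1, 1], [1, 1]]
    print(gemm(2, a, b, 3, c))
```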

cuSPARSE

The cuSPARSE library provides GPU-accelerated basic linear algebra subroutines for sparse matrices that perform significantly faster than CPU-only alternatives. It provides functionality that can be used to build GPU accelerated solvers. cuSPARSE is widely used by engineers and scientists working on applications such as machine learning, computational fluid dynamics, seismic exploration and computational sciences. Using cuSPARSE, applications automatically benefit from regular performance improvements and new GPU architectures. The cuSPARSE library is included in both the NVIDIA HPC SDK and the CUDA Toolkit.
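A typical sparse building block is the matrix-vector product on a matrix stored in compressed sparse row (CSR) form, where only nonzero values are kept. The sketch below shows the storage layout and the product in plain Python. The function names are hypothetical and this is not the cuSPARSE API; it only illustrates the kind of operation such a library accelerates.

```python
def dense_to_csr(matrix):
    """Convert a dense matrix to CSR arrays: values, column indices, row offsets."""
    values, col_indices, row_offsets = [], [], [0]
    for row in matrix:
        for j, v in enumerate(row):
            if v != 0:
                values.append(v)
                col_indices.append(j)
        row_offsets.append(len(values))  # where the next row starts
    return values, col_indices, row_offsets


def csr_matvec(values, col_indices, row_offsets, x):
    """Compute y = A @ x touching only the stored nonzeros of A."""
    y = []
    for r in range(len(row_offsets) - 1):
        start, end = row_offsets[r], row_offsets[r + 1]
        y.append(sum(values[k] * x[col_indices[k]] for k in range(start, end)))
    return y


if __name__ == "__main__":
    dense = [[1, 0, 2], [0, 0, 3], [4, 5, 0]]
    csr = dense_to_csr(dense)
    print(csr)
    print(csr_matvec(*csr, [1, 2, 3]))
```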

Optical Flow SDK

Optical Flow SDK exposes the latest hardware capability of Turing and Ampere GPUs dedicated to computing the relative motion of pixels between images. The hardware uses sophisticated algorithms to yield highly accurate flow vectors, which are robust to frame-to-frame intensity variations, and track the true object motion.
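To show what a flow vector means, here is a minimal block-matching sketch in plain Python: for one block in the previous frame it searches nearby offsets in the current frame and returns the displacement with the lowest sum of absolute differences. This is a classic textbook technique chosen for illustration. The source does not describe the hardware's algorithm, the function name is hypothetical, and this is not the Optical Flow SDK API.

```python
def block_flow(prev, curr, top, left, size=2, radius=1):
    """Return the (dy, dx) that best maps the block at (top, left) in prev onto curr."""
    height, width = len(prev), len(prev[0])
    best, best_cost = (0, 0), None
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # Skip candidate positions that fall outside the frame.
            if not (0 <= top + dy and top + dy + size <= height
                    and 0 <= left + dx and left + dx + size <= width):
                continue
            cost = sum(
                abs(prev[top + i][left + j] - curr[top + dy + i][left + dx + j])
                for i in range(size) for j in range(size)
            )
            if best_cost is None or cost < best_cost:
                best, best_cost = (dy, dx), cost
    return best


if __name__ == "__main__":
    prev = [[0, 0, 0, 0], [0, 9, 8, 0], [0, 7, 6, 0], [0, 0, 0, 0]]
    curr = [[0, 0, 0, 0], [0, 0, 9, 8], [0, 0, 7, 6], [0, 0, 0, 0]]
    print(block_flow(prev, curr, top=1, left=1))
```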

DOCA

DOCA

Data Center Infrastructure-on-a-Chip Architecture

The NVIDIA® DOCA™ SDK (software development kit) enables developers to rapidly create applications and services on top of NVIDIA BlueField® data processing units (DPUs), leveraging industry-standard APIs.

With DOCA, program the data center infrastructure of tomorrow by creating high-performance, software-defined, cloud-native, DPU-accelerated services to address the increasing performance and security demands of modern data centers.

CLARA

Clara Guardian

NVIDIA Clara™ Guardian is an application framework and partner ecosystem that simplifies the development and deployment of smart sensors with multimodal AI, anywhere in a healthcare facility. With a diverse set of pre-trained models, reference applications, and fleet management solutions, developers can build solutions faster—bringing AI to healthcare facilities and improving patient care.

Clara Imaging

Today, GPUs are found in almost all imaging modalities, including CT, MRI, x-ray, and ultrasound - bringing compute capabilities to the edge devices. With the boom of deep learning research in medical imaging, more efficient and improved approaches are being developed to enable AI-assisted workflows.

To develop these AI-capable applications, the data needs to be made AI-ready. NVIDIA Clara’s AI-Assisted Annotation does so by providing APIs and a toolkit to bring AI-assisted annotation capabilities to any medical viewer. After annotation, data scientists and researchers need to build a robust AI model. To enable this, NVIDIA Clara Train includes techniques like AutoML, privacy-preserving federated learning, and transfer learning. Once trained, the AI model can be taken into Clara Deploy, which provides a reference framework for writing an application workflow around the model so that it can interface with a hospital-like environment. Clara Deploy includes the platform capabilities required to support multi-AI, multi-domain workflows in a seamless manner.

Clara Parabricks

NVIDIA Clara™ Parabricks is a computational framework supporting genomics applications from DNA to RNA. It employs NVIDIA’s CUDA, HPC, AI, and data analytics stacks to build GPU accelerated libraries, pipelines, and reference application workflows for primary, secondary, and tertiary analysis. Clara Parabricks is a complete portfolio of off-the-shelf solutions coupled with a toolkit to support new application development to address the needs of genomic labs.

HPC

HPC SDK

A Comprehensive Suite of Compilers, Libraries and Tools for HPC

The NVIDIA HPC Software Development Kit (SDK) includes the proven compilers, libraries and software tools essential to maximizing developer productivity and the performance and portability of HPC applications.

The NVIDIA HPC SDK C, C++, and Fortran compilers support GPU acceleration of HPC modeling and simulation applications with standard C++ and Fortran, OpenACC® directives, and CUDA®. GPU-accelerated math libraries maximize performance on common HPC algorithms, and optimized communications libraries enable standards-based multi-GPU and scalable systems programming. Performance profiling and debugging tools simplify porting and optimization of HPC applications, and containerization tools enable easy deployment on-premises or in the cloud. With support for NVIDIA GPUs and Arm, OpenPOWER, or x86-64 CPUs running Linux, the HPC SDK provides the tools you need to build NVIDIA GPU-accelerated HPC applications.

CUDA Toolkit

The NVIDIA® CUDA® Toolkit provides a development environment for creating high performance GPU-accelerated applications. With the CUDA Toolkit, you can develop, optimize, and deploy your applications on GPU-accelerated embedded systems, desktop workstations, enterprise data centers, cloud-based platforms and HPC supercomputers. The toolkit includes GPU-accelerated libraries, debugging and optimization tools, a C/C++ compiler, and a runtime library to build and deploy your application on major architectures including x86, Arm and POWER.

Using built-in capabilities for distributing computations across multi-GPU configurations, scientists and researchers can develop applications that scale from single GPU workstations to cloud installations with thousands of GPUs.

OpenACC

OpenACC Directives

Accelerated computing is fueling some of the most exciting scientific discoveries today. For scientists and researchers seeking faster application performance, OpenACC is a directive-based programming model designed to provide a simple yet powerful approach to accelerators without significant programming effort. With OpenACC, a single version of the source code will deliver performance portability across the platforms.

IndeX

NVIDIA IndeX is a 3D volumetric interactive visualization SDK that allows scientists and researchers to visualize and interact with massive data sets, make real-time modifications, and navigate to the most pertinent parts of the data, all in real-time, to gather better insights faster. IndeX leverages GPU clusters for scalable, real-time, visualization and computing of multi-valued volumetric data together with embedded geometry data.

CUDA-X Libraries

NVIDIA® CUDA-X, built on top of NVIDIA CUDA®, is a collection of libraries, tools, and technologies that deliver dramatically higher performance—compared to CPU-only alternatives— across multiple application domains, from artificial intelligence (AI) to high performance computing (HPC).

NVIDIA libraries run everywhere from resource-constrained IoT devices, to self-driving cars, to the largest supercomputers on the planet. As a result, you get highly-optimized implementations of an ever-expanding set of algorithms. Whether you’re building a new application or accelerating an existing application, NVIDIA libraries provide the easiest way to get started with GPU acceleration.

Developer Tools

NVIDIA Developer Tools are a collection of applications, spanning desktop and mobile targets, which enable developers to build, debug, profile, and develop class-leading and cutting-edge software that utilizes the latest visual computing hardware from NVIDIA.

SimNet

AI-Accelerated Simulation Toolkit

Simulations are pervasive in science and engineering. They are computationally expensive and don't easily accommodate measured data coming from sources such as sensors or cameras. NVIDIA SimNet™ is a toolkit for physics-informed neural networks (PINNs), which addresses these challenges using AI and physics. Whether you're looking to get started with AI-driven physics simulations or working on complex nonlinear physics problems, NVIDIA SimNet is your toolkit for solving forward, inverse, or data assimilation problems.

DRIVE

DRIVE AGX

The NVIDIA DRIVE® AGX Developer Kit provides the hardware, software, and sample applications needed for the development of production level autonomous vehicles (AV). The NVIDIA DRIVE AGX System is built on production auto-grade silicon, features an open software framework, and has a large ecosystem of automotive partners (including auto grade sensor vendors, automotive Tier 1 suppliers) to choose from.

DRIVE Hyperion

NVIDIA DRIVE Hyperion™ is a reference architecture for NVIDIA’s Level 2+ autonomy solution consisting of a complete sensor suite and AI computing platform, along with the full software stack for autonomous driving, driver monitoring, and visualization. The DRIVE Hyperion Developer kit can be integrated into a test vehicle, enabling AV developers to develop, evaluate, and validate AV technology. Software is updated to DRIVE Hyperion using the NVIDIA DRIVE OTA over-the-air update infrastructure and services.

DRIVE Sim

NVIDIA DRIVE Sim™ is an end-to-end simulation platform, architected from the ground up to run large-scale, physically accurate multi-sensor simulation. It's open, scalable, modular and supports AV development and validation from concept to deployment, improving developer productivity and accelerating time to market.

DRIVE Constellation

NVIDIA DRIVE Constellation™ is a dedicated data center platform for AV hardware-in-the-loop (HIL) simulation at scale. It runs NVIDIA DRIVE Sim™ for the core simulation engine and tests on the same AV hardware used in the vehicle to support bit- and timing-accurate AV validation.

DRIVE Constellation is comprised of two side-by-side servers:

The first server — DRIVE Constellation Simulator — uses NVIDIA GPUs running DRIVE Sim software to simulate the virtual world. The simulator generates the sensor output from the virtual car driving in a virtual world.

The second server — DRIVE Constellation Vehicle — contains the autonomous vehicle target computer that processes the simulated sensor data and feeds driving decisions back to the DRIVE Constellation Simulator.
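The two-server arrangement above is a closed loop: one side produces sensor data, the other returns driving decisions. The toy sketch below mimics that loop in a single Python process. Everything in it is hypothetical (the class names, the one-dimensional world, the braking rule, and the numeric constants) and it bears no relation to the actual DRIVE software; it only shows the data flow between the two roles.

```python
class Simulator:
    """Stand-in for the simulator server: owns the virtual world state."""

    def __init__(self, gap_m=50.0, speed_mps=10.0):
        self.gap_m = gap_m          # distance to an obstacle ahead
        self.speed_mps = speed_mps  # current speed of the virtual car

    def sensor_output(self):
        return {"gap_m": self.gap_m, "speed_mps": self.speed_mps}

    def apply(self, accel_mps2, dt_s=0.1):
        """Advance the virtual world by one time step using the decision."""
        self.speed_mps = max(0.0, self.speed_mps + accel_mps2 * dt_s)
        self.gap_m -= self.speed_mps * dt_s


class VehicleComputer:
    """Stand-in for the vehicle server: turns sensor data into a decision."""

    BRAKE_MPS2 = 5.0  # assumed braking deceleration
    MARGIN_M = 5.0    # assumed safety margin

    def decide(self, sensors):
        stopping_m = sensors["speed_mps"] ** 2 / (2 * self.BRAKE_MPS2)
        if sensors["gap_m"] <= stopping_m + self.MARGIN_M:
            return -self.BRAKE_MPS2  # brake
        return 0.0                   # keep cruising


def run_closed_loop(steps=200):
    """Feed sensor output to the vehicle and its decision back to the simulator."""
    simulator, vehicle = Simulator(), VehicleComputer()
    for _ in range(steps):
        decision = vehicle.decide(simulator.sensor_output())
        simulator.apply(decision)
    return simulator.gap_m, simulator.speed_mps


if __name__ == "__main__":
    print(run_closed_loop())
```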

ISAAC

Isaac SDK

Build and deploy commercial-grade, AI-powered robots. The NVIDIA Isaac SDK™ is a toolkit that includes building blocks and tools that accelerate robot developments that require the increased perception and navigation features enabled by AI.

Isaac Sim

NVIDIA Isaac Sim, powered by Omniverse, is a scalable robotics simulation application and synthetic data generation tool that powers photorealistic, physically-accurate virtual environments to develop, test, and manage AI-based robots.

Jetson Developer Kits

NVIDIA® Jetson™ brings accelerated AI performance to the Edge in a power-efficient and compact form factor. Together with NVIDIA JetPack™ SDK, these Jetson modules open the door for you to develop and deploy innovative products across all industries.

JetPack

NVIDIA JetPack SDK is the most comprehensive solution for building end-to-end accelerated AI applications. All Jetson modules and developer kits are supported by JetPack SDK.

JetPack SDK includes the Jetson Linux Driver Package (L4T) with Linux operating system and CUDA-X accelerated libraries and APIs for Deep Learning, Computer Vision, Accelerated Computing and Multimedia. It also includes samples, documentation, and developer tools for both host computer and developer kit, and supports higher level SDKs such as DeepStream for streaming video analytics and Isaac for robotics.

RTX

OptiX SDK

NVIDIA OPTIX™ RAY TRACING ENGINE

An application framework for achieving optimal ray tracing performance on the GPU. It provides a simple, recursive, and flexible pipeline for accelerating ray tracing algorithms. Bring the power of NVIDIA GPUs to your ray tracing applications with programmable intersection, ray generation, and shading.

DirectX[DXR]

DIRECTX 12 ULTIMATE

DirectX 12 Ultimate is Microsoft’s latest graphics API, which codifies NVIDIA RTX’s innovative technologies, first introduced in 2018, as the cross-platform standard for next-generation, real-time graphics. It offers APIs for Ray Tracing, Variable Rate Shading, Mesh Shading, Sampler Feedback, and more, enabling developers to implement cinema-quality reflections, shadows, and lighting in games and real-time applications. With the DirectX 12 Agility SDK, developers can get the latest ray tracing technologies and graphics APIs immediately on any Windows 10 version from November 2019 onward.

VKRay

Real-time Denoisers

The NVIDIA Real-Time Denoisers (NRD) are a spatio-temporal API agnostic denoising library that’s designed to work with low ray per pixel signals. It uses input signals and environmental conditions to deliver results comparable to ground truth images.

RTXGI

RTX Global Illumination (RTXGI)

Leveraging the power of ray tracing, the RTX Global Illumination SDK provides scalable solutions to compute multi-bounce indirect lighting without bake times, light leaks, or expensive per-frame costs. RTXGI is supported on any DXR-enabled GPU, and is an ideal starting point to bring the benefits of ray tracing to your existing tools, knowledge, and capabilities.

RTXDI

RTX Direct Illumination (RTXDI)

Imagine adding millions of dynamic lights to your game environments without worrying about performance or resource constraints. RTXDI makes this possible while rendering in real time.

Geometry of any shape can now emit light and cast appropriate shadows: Tiny LEDs. Times Square billboards. Even exploding fireballs. RTXDI easily incorporates lighting from user-generated models. And all of these lights can move freely and dynamically.

DLSS

NVIDIA DLSS is a new and improved deep learning neural network that boosts frame rates and generates beautiful, sharp images for your games. It gives you the performance headroom to maximize ray tracing settings and increase output resolution. DLSS is powered by dedicated AI processors on RTX GPUs called Tensor Cores.

MDL SDK

Enables quick integration of physically-based materials into rendering applications

The NVIDIA MDL SDK is a set of tools to integrate MDL support into rendering applications. It contains components for loading, inspecting, editing of material definitions as well as compiling MDL functions to GLSL, HLSL, Native x86, PTX and LLVM-IR. With the NVIDIA MDL SDK, any physically based renderer can easily add support for MDL and join the MDL eco-system.

The NVIDIA Material Definition Language (MDL) is a programming language for defining physically based materials for rendering. A rich vocabulary of material building blocks based on bidirectional scattering distribution functions (BSDFs) allows creation of a wide range of physical materials such as woods, fabrics, translucent plastics, and more. The language is flexible enough to allow applications to add support for popular material models without additional changes to a renderer’s core shading code; examples include Epic’s Unreal physical material model and the material model used in X-Rite’s SVBRDF model. MDL is defined abstractly enough that renderers of various architectures can support it. A C-like language for defining texturing functions allows the implementation of custom texturing workflows, texture projections, and procedural textures.

PhysX

NVIDIA PhysX is a scalable multi-platform physics simulation solution supporting a wide range of devices, from smartphones to high-end multicore CPUs and GPUs.

The powerful SDK brings high performance and precise accuracy to industrial simulation use cases, from traditional VFX and game development workflows to high-fidelity robotics, medical simulation, and scientific visualization applications.

Flex

FleX is a particle-based simulation technique for real-time visual effects. Traditionally, visual effects are made using a combination of elements created using specialized solvers for rigid bodies, fluids, clothing, etc. Because FleX uses a unified particle representation for all object types, it enables new effects where different simulated substances can interact with each other seamlessly. Such unified physics solvers are a staple of the offline computer graphics world, where tools such as Autodesk Maya's nCloth and Softimage's Lagoa are widely used. The goal for FleX is to use the power of GPUs to bring the capabilities of these offline applications to real-time computer graphics.

vMaterials

NVIDIA vMaterials are a curated collection of MDL materials and lights representing common real world materials used in design and AEC workflows. Integrating the Iray or MDL SDK quickly brings a library of hundreds of ready to use materials to your application without writing shaders. The materials are built with a consistent scale so designers can easily switch from material to material without needing to re-adjust scale. The layering capabilities of MDL make quick work of changing or enhancing the materials to get just the look that’s needed. Since vMaterials are built on MDL, they can easily be saved and opened in other supporting applications. NVIDIA vMaterials make it easy to provide your customers a wide range of materials quickly and easily.

Reflex

The NVIDIA Reflex SDK allows game developers to implement a low latency mode that aligns game engine work to complete just-in-time for rendering, eliminating the GPU render queue and reducing CPU back pressure in GPU-bound scenarios. As a developer, System Latency (click-to-display) can be one of the hardest metrics to optimize for. In addition to latency reduction functions, the SDK also features measurement markers to calculate both Game and Render Latency - great for debugging and visualizing in-game performance counters.

Unreal Engine 4 Branch

World’s most open and advanced real-time 3D creation platform. Continuously evolving to serve not only its original purpose as a state-of-the-art games engine, today it gives creators across industries the freedom and control to deliver cutting-edge content, interactive experiences, and immersive virtual worlds.

RTX Memory Utility

RTXMU combines both compaction and suballocation techniques to optimize and reduce memory consumption of acceleration structures for any DXR or Vulkan Ray Tracing application.
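The suballocation half of this idea can be sketched as a simple bump allocator that packs many small aligned ranges into a few large blocks, instead of giving each acceleration structure its own allocation. The code below is a plain-Python illustration, not the RTXMU API: the class and function names are hypothetical, the 64 KiB block size and 256-byte alignment are assumed values, and compaction is not modeled.

```python
class Suballocator:
    """Bump-allocates aligned ranges out of large fixed-size blocks."""

    def __init__(self, block_size=65536, alignment=256):
        self.block_size = block_size  # assumed size of each large block
        self.alignment = alignment    # assumed alignment of each range
        self.used = []                # bytes consumed in each block

    def allocate(self, size):
        """Return (block_index, offset) for a new suballocation."""
        aligned = -(-size // self.alignment) * self.alignment  # round up
        if aligned > self.block_size:
            raise ValueError("request larger than one block")
        for index, used in enumerate(self.used):
            if used + aligned <= self.block_size:
                self.used[index] = used + aligned
                return index, used
        self.used.append(aligned)  # no block had room: open a new one
        return len(self.used) - 1, 0

    def reserved_bytes(self):
        return len(self.used) * self.block_size


def dedicated_bytes(sizes, granularity=65536):
    """Memory reserved if every structure gets its own rounded-up allocation."""
    return sum(-(-size // granularity) * granularity for size in sizes)


if __name__ == "__main__":
    sizes = [1000, 3000, 500, 12000]
    allocator = Suballocator()
    print([allocator.allocate(size) for size in sizes])
    print(allocator.reserved_bytes(), dedicated_bytes(sizes))
```

With many small structures, most of the savings come from not rounding each one up to the allocation granularity on its own.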

Metropolis

Metropolis

An application framework that simplifies the development, deployment and scale of AI-enabled video analytics applications from edge to cloud.

DeepStream SDK

Build and deploy AI-powered Intelligent Video Analytics apps and services. DeepStream offers a multi-platform scalable framework with TLS security to deploy on the edge and connect to any cloud.

Transfer Learning Toolkit

Creating an AI/ML model from scratch to solve a business problem is capital intensive and time consuming. Transfer learning is a popular technique that can be used to extract learned features from an existing neural network model to a new one. The NVIDIA Transfer Learning Toolkit (TLT) is the AI toolkit that abstracts away the AI/DL framework complexity and leverages high quality pre-trained models to enable you to build production quality models faster with only a fraction of data required.
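The core of transfer learning can be shown in a few lines: keep the pretrained feature layers frozen and train only a small new head on the new task. The sketch below does this in plain Python with a tiny hand-made "pretrained" layer. It is not the Transfer Learning Toolkit API; the function names, the two frozen ReLU features, the learning rate, and the toy data are all assumptions made for illustration.

```python
def features(x, frozen_weights):
    """Frozen 'pretrained' layer: ReLU(w * x + b) per unit; never updated."""
    return [max(0.0, w * x + b) for w, b in frozen_weights]


def predict(x, frozen_weights, head):
    return sum(h * f for h, f in zip(head, features(x, frozen_weights)))


def train_head(data, frozen_weights, epochs=200, lr=0.05):
    """Fit only the new linear head with stochastic gradient descent."""
    head = [0.0] * len(frozen_weights)
    for _ in range(epochs):
        for x, target in data:
            feats = features(x, frozen_weights)
            error = sum(h * f for h, f in zip(head, feats)) - target
            head = [h - lr * error * f for h, f in zip(head, feats)]
    return head


def mean_squared_error(data, frozen_weights, head):
    return sum((predict(x, frozen_weights, head) - t) ** 2
               for x, t in data) / len(data)


if __name__ == "__main__":
    frozen = [(1.0, 0.0), (-1.0, 0.0)]  # stands in for pretrained features
    data = [(-1.0, 2.0), (0.5, 1.0), (1.0, 2.0), (-0.5, 1.0)]  # target = 2 * |x|
    head = train_head(data, frozen)
    print(head, mean_squared_error(data, frozen, head))
```

Because only the head is trained, a handful of samples is enough here, which mirrors the claim that reusing learned features reduces the amount of data required.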

A toolkit for anyone building AI apps and services, TLT helps reduce costs associated with large scale data collection, labeling, and eliminates the burden of training AI/ML models from scratch.

With TLT, you can use NVIDIA’s production quality pre-trained models and deploy as is or apply minimal fine-tuning for various computer vision and conversational AI use-cases. TLT is a core component of NVIDIA's Train, Adapt and Optimize (TAO) platform, a UI-based, guided workflow for creating AI

Other Platforms

Aerial

Build and Deploy GPU-Accelerated 5G Virtual Radio Access Networks (vRAN)

NVIDIA Aerial is an application framework for building high-performance, software-defined, cloud-native 5G applications to address increasing consumer demand. Optimize your results with parallel processing on the GPU for baseband signals and data flow.

Arm

NVIDIA’s accelerated computing platform is used to help solve the world’s most challenging computational problems. Today, NVIDIA GPUs not only power some of the world’s largest supercomputers and cloud data centers; they also power edge devices across industries. NVIDIA is expanding support for longtime partner Arm to open new opportunities for developers across an already vibrant ecosystem.

CloudXR

CloudXR is NVIDIA's solution for streaming virtual reality (VR), augmented reality (AR), and mixed reality (MR) content from any OpenVR XR application on a remote server—cloud, data center, or edge. The CloudXR streaming solution includes NVIDIA RTX™ hardware, NVIDIA RTX Virtual Workstation (vWS) drivers, and the CloudXR software development kit (SDK).

DGX

NVIDIA DGX™ systems deliver the world’s leading solutions for enterprise AI infrastructure at scale.

Riva

NVIDIA Riva is a GPU-accelerated SDK for building multimodal conversational AI applications that deliver real-time performance on GPUs.

Maxine

NVIDIA Maxine™ is a GPU-accelerated SDK with state-of-the-art AI features for developers to build virtual collaboration and content creation applications such as video conferencing and live streaming.

Maxine’s AI SDKs—Video Effects, Audio Effects, and Augmented Reality (AR)—are highly optimized and include modular features that can be chained into end-to-end pipelines to deliver the highest performance possible on GPUs, both on PCs and in data centers. Maxine can also be used with NVIDIA Riva, an SDK for building conversational AI applications, to offer world-class language-based capabilities such as transcription and translation.

Developers can add Maxine AI effects into their existing applications or develop new pipelines from scratch using NVIDIA DeepStream, an SDK for building intelligent video analytics, and NVIDIA Video Codec, an SDK for accelerated encode, decode, and transcode.

Merlin

NVIDIA Merlin™ is an open-source framework for building large-scale deep learning recommender systems.

Omniverse

NVIDIA Omniverse™ is a powerful multi-GPU real-time simulation and collaboration platform for 3D production pipelines based on Pixar's Universal Scene Description and NVIDIA RTX™ technology.

Rivermax

Optimized networking SDK for Media and Data streaming applications.

NVIDIA® Rivermax® offers a unique IP-based solution for any media and data streaming use case. Rivermax together with NVIDIA GPU accelerated computing technologies unlocks innovation for a wide range of applications in Media and Entertainment (M&E), Broadcast, Healthcare, Smart Cities and more.

Rivermax leverages NVIDIA ConnectX® and BlueField DPU hardware streaming acceleration technology that enables direct data transfers to and from the GPU, delivering best-in-class throughput and latency with minimal CPU utilization for streaming workloads.

Rivermax is the only fully-virtualized streaming solution that complies with the stringent timing and traffic flow requirement of the SMPTE ST 2110-21 specification. Rivermax enables the future of cloud-based software defined broadcast.

TAO

NVIDIA Train, Adapt, and Optimize (TAO) is an AI-model-adaptation platform that simplifies and accelerates the creation of enterprise AI applications and services. By fine-tuning pretrained models with custom data through a UI-based, guided workflow, enterprises can produce highly accurate computer vision, speech, and language understanding models in hours rather than months, eliminating the need for large training runs and deep AI expertise.